
I am a second-year Computer Science Ph.D. student at NYU Courant, advised by Lakshmi Subramanian. Previously, I was a Research Fellow at Microsoft Research India (MSRI), where I was advised by Dr. Manik Varma and Dr. Amit Sharma. There, I worked closely with the Ads Recommendation Team under Wenhao Lu and Akshay Soni. I graduated from the Indian Institute of Technology (IIT) Delhi with a Bachelor's in Mechanical Engineering and a minor in Computer Science. During my undergraduate studies, I worked with Prof. Chetan Arora. Email / CV / Google Scholar / Twitter / GitHub


Summer 2025

Applied Scientist Intern @ Amazon

Working on pretraining foundational large language models

Sept 2024

Joined NYU Courant

Started Ph.D. in Computer Science

2022 – 2024

Research Fellow @ Microsoft Research India

Worked on extreme classification and recommendation systems


My research focuses on understanding and improving how large language models reason and behave. I'm particularly interested in agentic memory systems that enable persistent, context-aware reasoning; rule-following behavior, so that models reliably adhere to instructions and constraints; and how knowledge is represented intrinsically within model weights. I also explore architectural innovations that make these capabilities more robust and scalable.


Ananth Balashankar, Ankit Bhardwaj, Neelabh Madan+, Thomas Wies, Lakshmi Subramanian, Under Review: CLeaR 2026


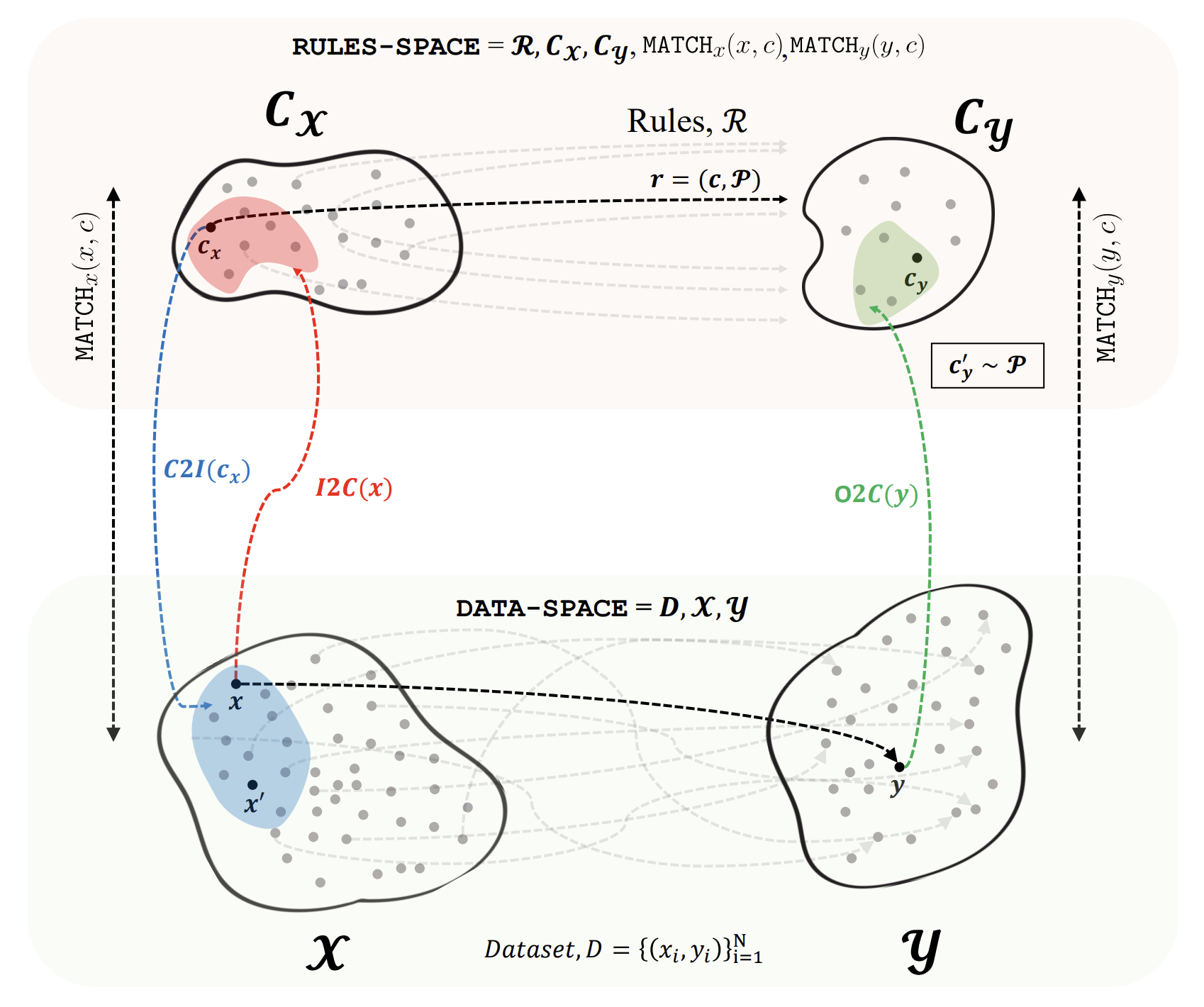

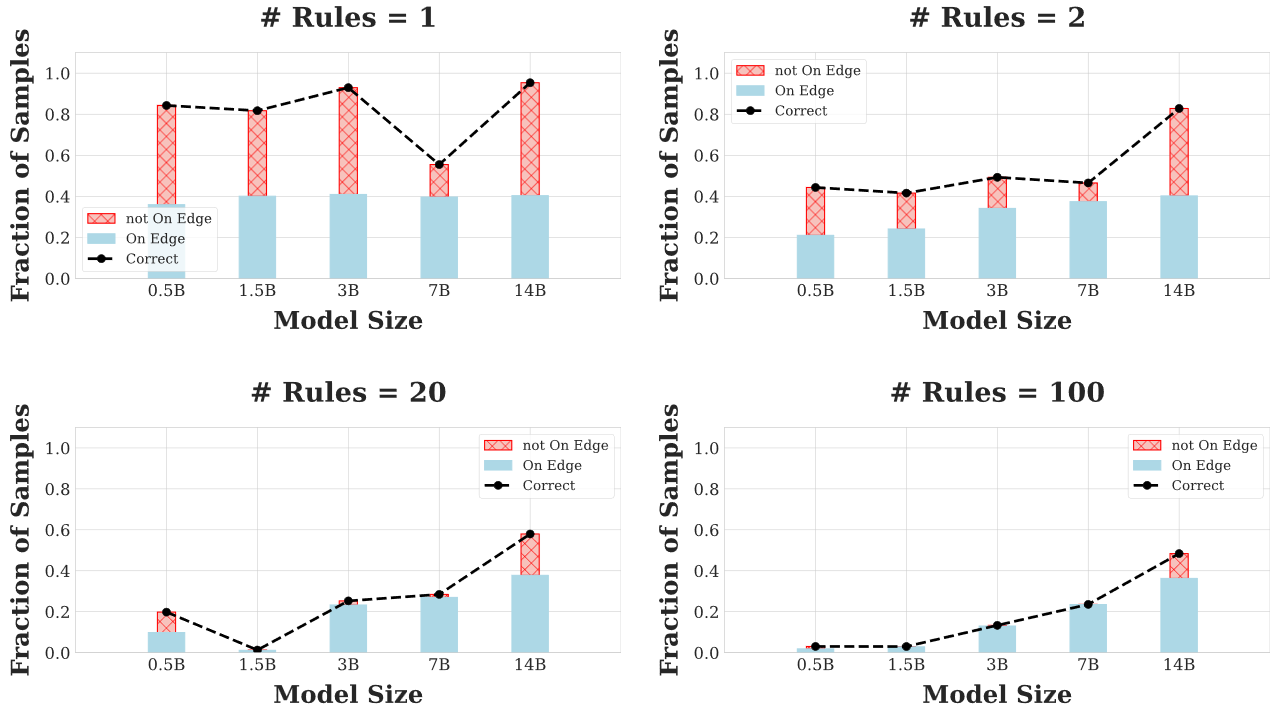

Neelabh Madan*, Lakshminarayanan Subramanian, ICML Workshop MoFA 2025 [Poster] Paper


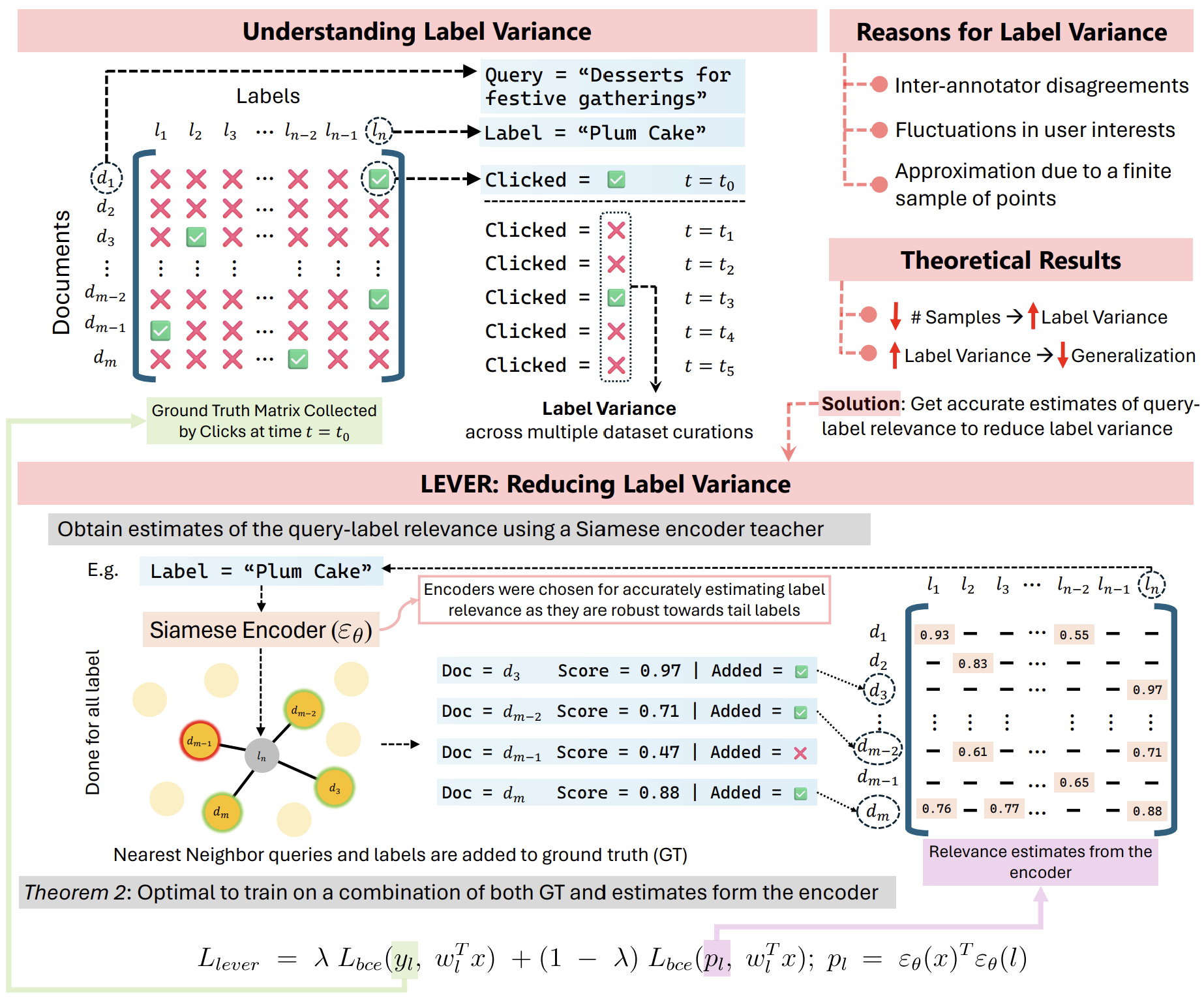

Anirudh Buvanesh*, Rahul Chand*, Jatin Prakash*, Bhawna Paliwal, Mudit Dhawan, Neelabh Madan, Deepesh Hada, Yashoteja Prabhu, Manik Varma, ICLR 2024 Paper / Code


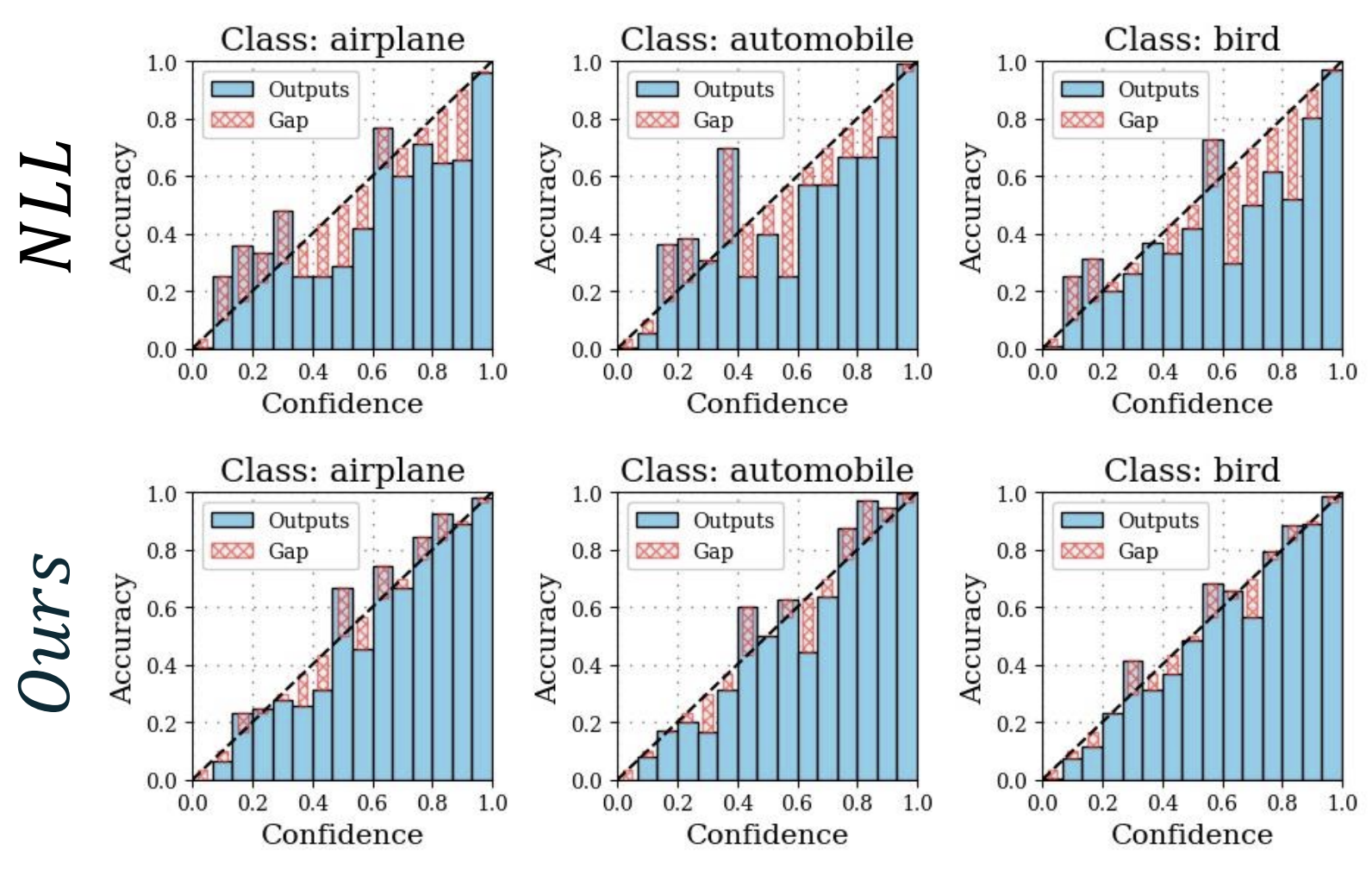

Neelabh Madan*, Ramya Hebbalaguppe*, Jatin Prakash*, Chetan Arora, CVPR 2022 Oral (4.2% acceptance rate) Paper / Code